Introduction

In the face of a dynamically changing financial world, an investment fund faced the challenge of modernizing its outdated data processing systems. The client, previously using solutions based on SSIS (SQL Server Integration Services), decided to switch to modern technology to improve operational efficiency and flexibility. The main goal was to integrate and process data from various sources, including stock market data, customer portfolio data, risk data and other sources to create a new reporting system. The choice fell on the Snowflake platform, valued for its cloud scalability and flexibility in handling data. The data loading platform ADF was used as the client infrastructure relies on Azure.

Problem

The key challenge was to process and integrate data from various file formats, including Excel files and ZIP archives, without the need for creating complex data transformations for each new source. The original plan involved hiring additional developers to redesign the system, however, the proposal of automating the data loading process seemed to be a more efficient and cost-saving solution.

Solution

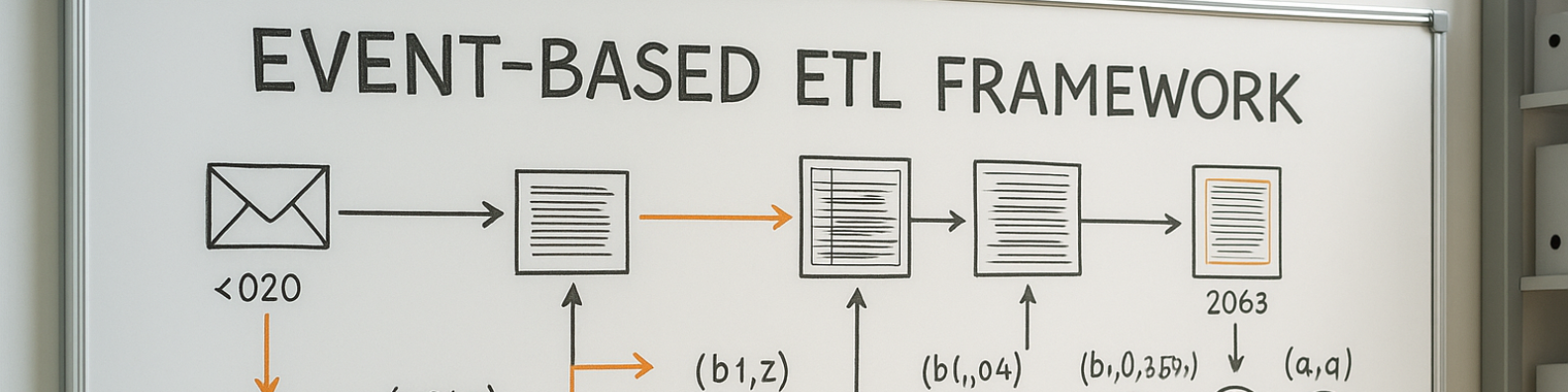

The proposed solution involved building a data loading schedule with limited bandwidth, using an event-driven architecture based on Azure Event Hub. This allowed for the immediate loading of available data without waiting for all files to appear, significantly reducing waiting time for resources and enabling flexible management of the data loading order. The system was designed to generate events based on the appearance of files, which in turn triggered further stages of data processing. Replacing the rigid loading schedule with an event system significantly increased the efficiency and scalability of the process.

For the project implementation, tools such as Azure SQL Database, Azure Data Factory, and Azure Event Hub were used. The use of Azure Data Factory enabled the standardization of communication and file mapping, simplifying the data loading process management. An interesting aspect of the project was the decision to stop using Informatica, which allowed the client to save money, showing that the selected Azure tools fully met the project’s needs.

Conclusions

The implementation of the new data processing system brought a series of benefits to the fund, including greater reliability, better control of load peaks, and savings in time and money thanks to process automation. One of the key conclusions is that a system built on an event-driven architecture allows for a quick response to potential errors, without blocking the entire system, which increases its resilience and efficiency. Thanks to the centralization of file loading definitions, any fix or modification is much simpler and faster, which translates into increased flexibility and scalability of the system.

This project serves as an example of effective data processing systems transformation, demonstrating how advanced technologies and an automation-based approach can bring significant operational and financial benefits in the financial sector.